In just six months, generative AI has captured the world's attention. ChatGPT, the most prominent example of the technology, quickly came into widespread use. However, security expert Ross Anderson warns that future generations of models risk an intellectual decline.

Ross Anderson is a pioneer in security engineering and a leading authority on finding vulnerabilities in security systems and algorithms. A Fellow of the Royal Academy of Engineering and a Professor at the University of Cambridge, he has contributed extensively to the field of information security, shaping threat models across various sectors.

Now, Anderson raises the alarm about a global threat to humanity: the collapse of large language models (LLMs). Until recently, most of the text on the internet was written by humans. LLMs are now becoming a primary tool for editing and creating new text, and their output is increasingly displacing human-written content.

This shift raises important questions: Where will this trend lead, and what will happen when LLMs dominate the internet? The implications go beyond text alone. For instance, if a music model is trained on compositions by Mozart, its output may lack the brilliance of the original and sound more like a musical “Salieri.” If that output is then used to train the next model, and so on, the risk of declining quality and intelligence grows with each generation.

This concept may remind you of the movie “Multiplicity,” starring Michael Keaton, in which each successive clone turns out less intelligent than the last.

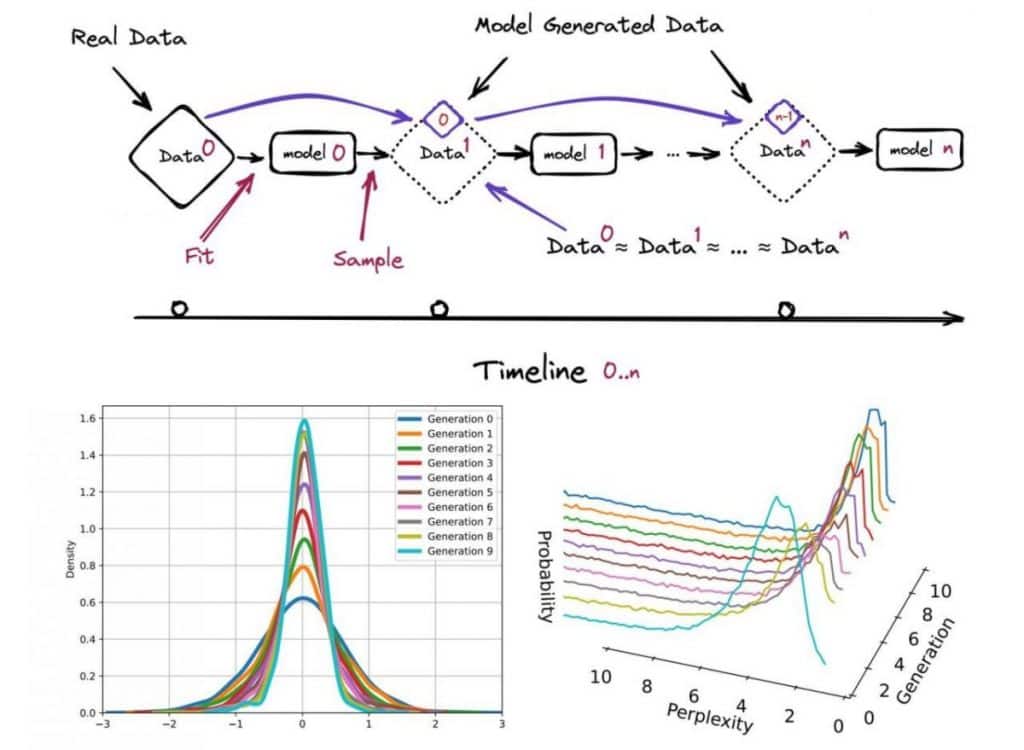

The same phenomenon can occur with LLMs. Training a model on model-generated content causes irreversible defects, and text quality degrades over generations. The original distribution of content becomes distorted, and nonsensical output creeps in. In the simplest case, a Gaussian distribution that is repeatedly refitted to its own samples narrows with each generation, and in extreme cases the text may become meaningless. Later generations may even misperceive reality based on the mistakes made by their predecessors. This phenomenon is known as “model collapse.”
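
The Gaussian case can be illustrated with a toy simulation. This is a minimal sketch, not the experiment from Anderson's work: the function name `simulate_collapse`, the sample size, the number of generations, and the seed are all illustrative assumptions. Each "generation" fits a Gaussian to a small sample drawn from the previous generation's fit, so it never sees the original data again.

```python
import random
import statistics


def simulate_collapse(generations=200, sample_size=20, seed=0):
    """Repeatedly fit a Gaussian to samples drawn from the previous fit.

    Returns the fitted standard deviation for every generation,
    starting with the original distribution at index 0.
    """
    rng = random.Random(seed)
    mu, sigma = 0.0, 1.0  # generation 0: stands in for human-written data
    sigmas = [sigma]
    for _ in range(generations):
        # Each generation is trained only on its predecessor's output.
        samples = [rng.gauss(mu, sigma) for _ in range(sample_size)]
        # Refit by maximum likelihood (pstdev is the MLE of sigma).
        mu = statistics.fmean(samples)
        sigma = statistics.pstdev(samples)
        sigmas.append(sigma)
    return sigmas


if __name__ == "__main__":
    sigmas = simulate_collapse()
    for g in (0, 10, 50, 100, 200):
        print(f"generation {g:3d}: sigma = {sigmas[g]:.6f}")
```

With a small sample per generation, the fitted standard deviation tends to drift toward zero: the tails of the distribution are undersampled first, and each refit locks in that loss. Larger samples slow the drift but, in this toy setting, do not remove it.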

The consequences of model collapse are significant, but there is hope. Anderson suggests that model collapse can be prevented, offering a glimmer of optimism amid the concerns about the declining quality of online information.

While generative AI holds promise, it is essential to remain vigilant and address the challenges it presents. By understanding and mitigating the risks associated with model collapse, we can work towards harnessing the benefits of this technology while preserving the integrity of information on the internet.

Read More: mpost.io
