Following up on a reader's comment to my rant about ChatGPT totally botching my writing, I went with their suggestion to try Microsoft's Copilot instead. I asked the same question, "What is Wagner James Au's definition of the Metaverse?" and got this output:

Wagner James Au defines the Metaverse as a vast, immersive virtual world simultaneously accessible by millions of people through highly customizable avatars and powerful experience creation tools integrated with the offline world through its virtual economy and external technology. His book, “Making a Metaverse That Matters: From Snow Crash & Second Life to A Virtual World Worth Fighting For,” explores nascent metaverse platforms, interviews metaverse creators like Neal Stephenson, and aims to dispel myths and misconceptions around this interconnected universe of virtual worlds. In essence, the Metaverse represents a convergence of digital and physical experiences, where users can interact, create, and explore within a shared digital space.

Much better than ChatGPT! I also like how Copilot's output includes source web links for further reading, reinforcing its role as your assistant rather than the ultimate purveyor of truth.

Still, Copilot is decidedly not perfect, adding in bizarre inaccuracies:

I rarely write about the Metaverse being "a convergence of digital and physical experiences" (though others emphasize that angle much more), and I've devoted thousands of words to explaining why the Metaverse should not be defined as an "interconnected universe of virtual worlds". Worst of all, putting Neal Stephenson in the "metaverse creators like" category is so profoundly, face-palmingly wrong that if I were teaching a class on the topic and a student wrote that in a paper, I'd deduct a whole grade or two.

So overall I still question the usefulness of LLMs beyond being a highly imperfect, unreliable assistant. Anyway, here's the comment from reader "N", who makes some good points and even shows how impressive Copilot can be at discussing Second Life-specific content:

It never ceases to amaze me how some people persist with this bullshit, using an LLM as a substitute for Google or as a database of factual information. Especially when the LLM in question is an outdated model running in an old application that doesn't even have web search capabilities, nor RAG for grounding. By the way, Copilot Precise, as a search engine assistant, found your definition and quoted it verbatim, even providing a link to the source.

Mitch Wagner, by contrast, also provided a few examples of what (even) ChatGPT is actually useful for (and there are many more), and correctly said it's not reliable as an information source. That "ANN/LLMs hallucinate" has been known forever. Also, an LLM with a few billion parameters can't physically hold a 15-trillion-token dataset. It's incorrect to label hallucinations as lies, though: "they lie" implies an intentional act of deception, whereas LLMs fill in the gaps with "hallucinated" information. Do you lie when your brain reconstructs what your blind spot can't see, based on the average surroundings?

Also, I find it interesting that Mitch Wagner said "ChatGPT was faster" than getting information from Google. Sometimes, when searching for technical or obscure information, you have to rummage through numerous search results and websites. GPT-4, which powers Copilot Precise, might already have a decent idea of what the solution is (maybe an incomplete one), but it knows what to search for; it then uses its search tool and either finds the answer or at least puts you on the right track. You can also ask for something more complex than a trivial plain search. Copilot and Perplexity have saved me quite a bit of time, multiple times.

You might be interested in an example of multi-search for Second Life: "What are the most trendy Second Life female mesh bodies as of 2024? Please list them and for each of them search for their respective inworld store in secondlife.com Destinations." In a few seconds, Copilot Precise correctly listed Maitreya Lara and LaraX, eBody Reborn, and Meshbody Legacy, found their stores in Destinations, and linked them. You can also ask it to make a comparison table. It's not always perfect, but it's pretty handy.

I wouldn't take ChatGPT and its old GPT-3.5 as the state of the art, let alone use it as a benchmark to evaluate current advances in AI research. Today, even compact models like Phi-3 and Llama 3 8B, which can run locally on a laptop or a high-end smartphone, are nearly on par with GPT-3.5 in many tasks. Meanwhile, while GPT-4 continues to be helpful for coding, models like Gemini 1.5 Pro and Claude 3 Opus seem better than GPT-4 at generating fiction ideas (and writing fiction) or brainstorming. Gemini has a huge context window and can write a critique of an entire novel. Llama 3 400B is about to be released, and it looks like OpenAI is releasing a new model this year.

It keeps improving. I don't think these models will be on par with a senior software engineer or a professional writer or poet until we develop an actual strong AI or AGI (or at least good introspection; the labs are at least working on planning), but conversely, I wouldn't say they are useless, or focus only on the negative.

Of course the GPT-3.5 model powering ChatGPT hasn't been updated: OpenAI obviously wouldn't spend millions retraining that older model, still less for minor facts that aren't repeated enough times in the training dataset and can't fit within its limited number of parameters anyway, and still less for the sake of the wrong use in the wrong application, when their newer models (or even GPT-3.5 itself) already handle that in better-suited applications such as Perplexity or Copilot.

I'd quibble with the claim that "LLMs hallucinate" is more accurate than "LLMs lie", since a hallucination implies there's already a base stable awareness where none actually exists. Then again, pretty much any verb we use related to AI (thinks, decides, chooses, etc.) implies some level of human-type sentience that simply isn't there at all.

Read More: nwn.blogs.com

Bitcoin

Bitcoin  Ethereum

Ethereum  Tether

Tether  XRP

XRP  Solana

Solana  USDC

USDC  Dogecoin

Dogecoin  TRON

TRON  Cardano

Cardano  Lido Staked Ether

Lido Staked Ether  Wrapped Bitcoin

Wrapped Bitcoin  Hyperliquid

Hyperliquid  Sui

Sui  Wrapped stETH

Wrapped stETH  Chainlink

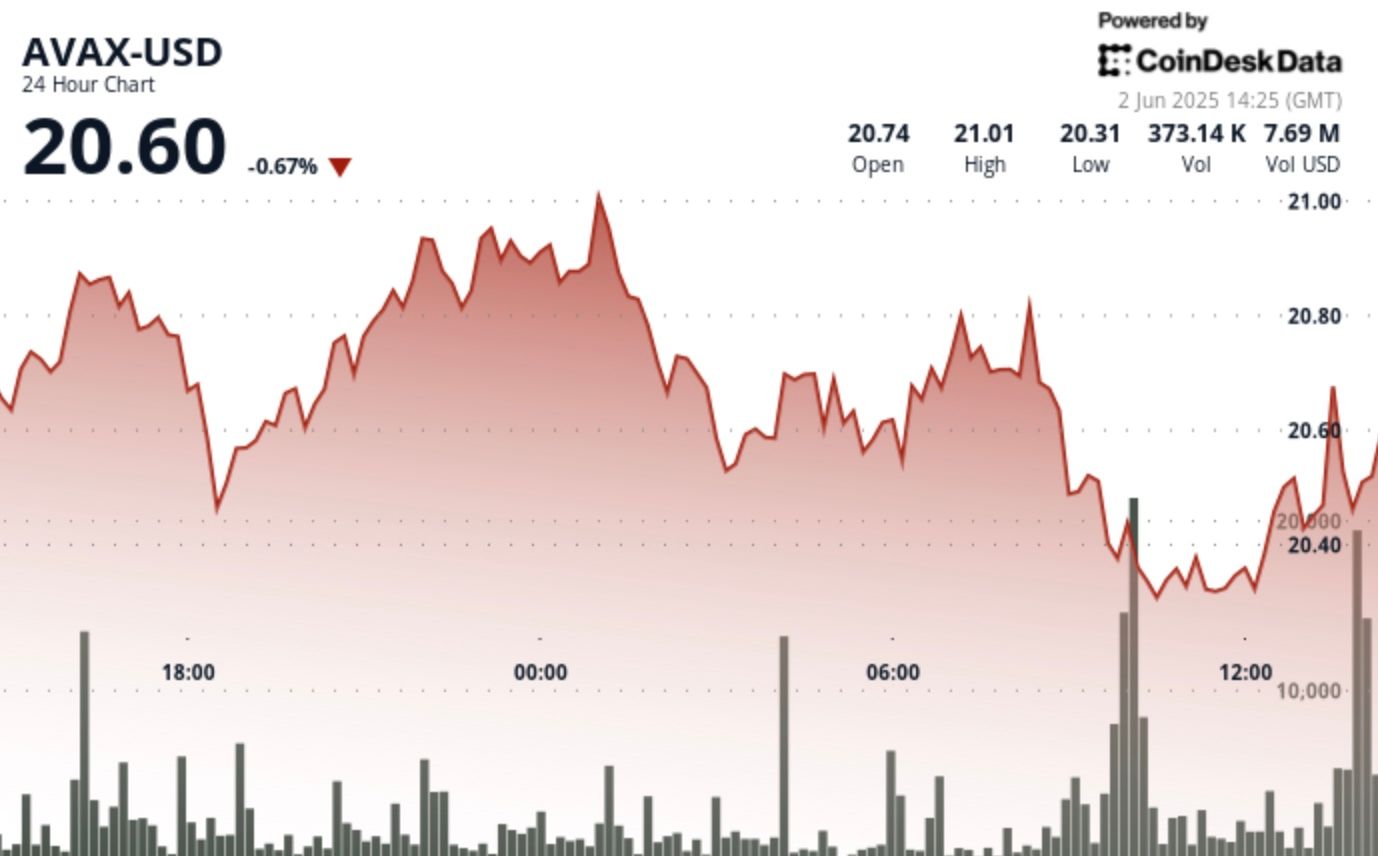

Chainlink  Avalanche

Avalanche  Stellar

Stellar  Bitcoin Cash

Bitcoin Cash  Toncoin

Toncoin  LEO Token

LEO Token  Shiba Inu

Shiba Inu  USDS

USDS  Hedera

Hedera  Monero

Monero  WETH

WETH  Litecoin

Litecoin  Wrapped eETH

Wrapped eETH  Polkadot

Polkadot  Binance Bridged USDT (BNB Smart Chain)

Binance Bridged USDT (BNB Smart Chain)  Ethena USDe

Ethena USDe  Bitget Token

Bitget Token  Pepe

Pepe  Pi Network

Pi Network  WhiteBIT Coin

WhiteBIT Coin  Coinbase Wrapped BTC

Coinbase Wrapped BTC  Dai

Dai  Aave

Aave  Uniswap

Uniswap  Bittensor

Bittensor  Ethena Staked USDe

Ethena Staked USDe  Cronos

Cronos  Aptos

Aptos  OKB

OKB  NEAR Protocol

NEAR Protocol  BlackRock USD Institutional Digital Liquidity Fund

BlackRock USD Institutional Digital Liquidity Fund  Jito Staked SOL

Jito Staked SOL  Internet Computer

Internet Computer  Ondo

Ondo  Ethereum Classic

Ethereum Classic