[ad_1]

Meta has recently released a set of LLaMa-2-Chat models in various sizes. This release from the LLM department of GenAI has created a buzz in the industry.

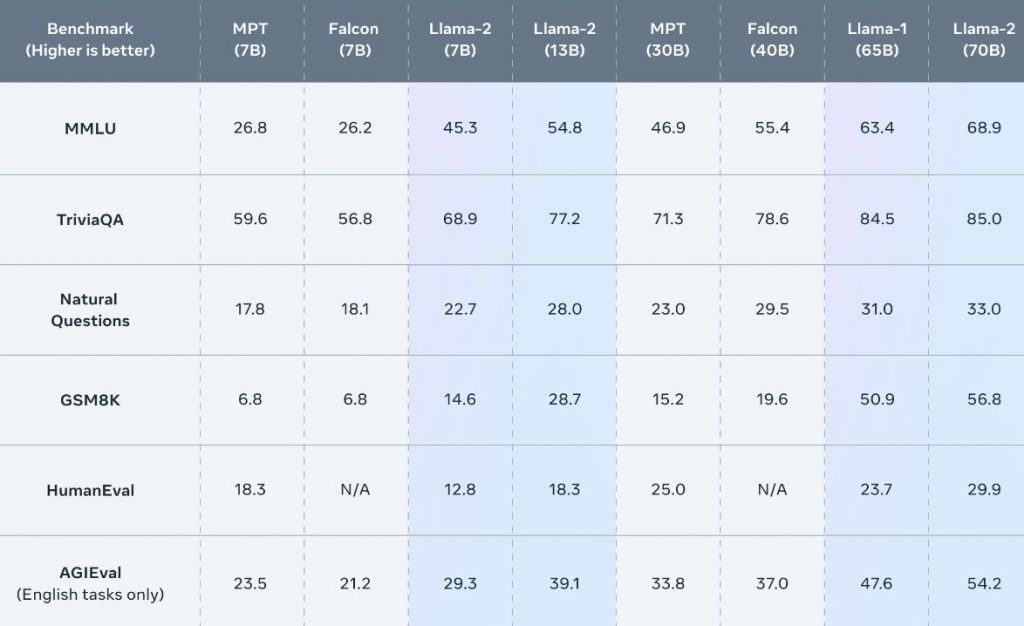

LLaMa-2-Chat is a truly remarkable achievement created by the talented team at GenAI’s LLM department. With impressive parameters count of 70 billion, this model is comparable to and even outperforms the highly regarded GPT-3.5 on certain benchmarks.

Highlights:

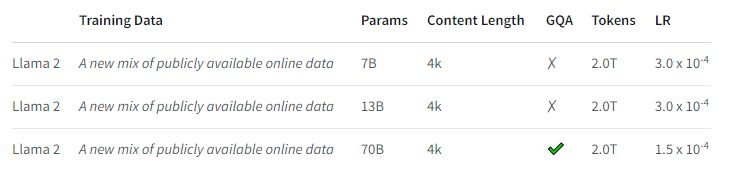

- Commercial friendly

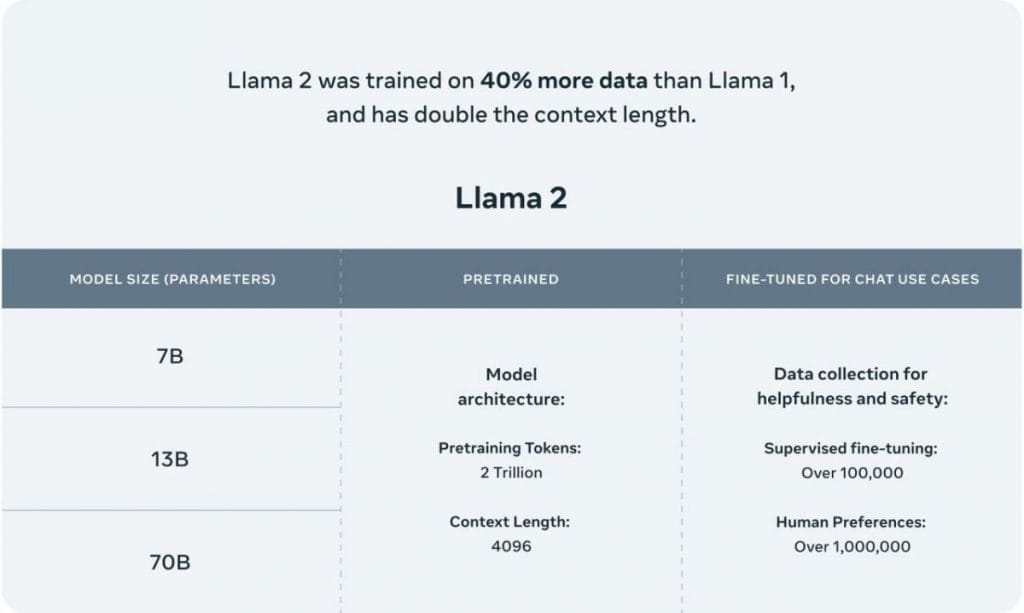

- Pretrained on 2T tokens

- Strong MMLU scores (i.e. strong reasoning)

- 4K context

- (Extendable) RoPE embeddings

- Coding performance is meh

- SFT/RLHF chat versions

The fact that LLaMa-2-Chat is the first model of its size to be fine-tuned using RLHF (Reinforcement Learning from Human Feedback) makes it even more remarkable. In an unprecedented move, Meta has made this model completely free for commercial use. Those interested can request the download link from their official website.

One of the most significant advantages of LLaMa-2-Chat is its potential to create ChatGPT analogues without the need to share any data with OpenAI. This empowers developers and researchers to harness the power of the model while maintaining complete control over their data. You can download new model here.

HUGE AI NEWS!!!

Llama 2 just came out! And guess what? It’s fully open-source and can be used for commercial purposes!!! 7-70B parameters are supported.

They also release fine-tuned variants optimized for dialogue use cases (LLaMA 2-Chat)!

The paper looks super detailed – 76… pic.twitter.com/yZahl7Jzya

— Aleksa Gordić

(@gordic_aleksa) July 18, 2023

In terms of human evaluation metrics, LLaMa-2-Chat stands shoulder-to-shoulder with ChatGPT-3.5 in terms of quality. Notably, it showcases exceptional performance on mathematical problems, outperforming other models in this domain.

- In February, Meta has released LLaMA model, a large language model designed to support AI researchers. Available in various sizes (7B, 13B, 33B, and 65B parameters), LLaMA allows researchers to test new approaches and explore new use cases. The model is ideal for fine-tuning tasks and is built on a large set of unlabelled data. Despite its versatility, LLaMA faces risks of bias, toxic comments, and hallucinations. The model is released under a non-commercial license focused on research use cases, and eligibility for access is evaluated on a case-by-case basis.

- The LLaMa model, with 7 billion parameters, has achieved lightning-fast inference on a MacBook with the M2 Max chip. This achievement was made possible by Greganov’s successful implementation of model inference on the Metal GPU, a specialized accelerator found in Apple’s latest chips. The LLaMa model demonstrates 0% CPU utilization, harnessing the processing power of all 38 Metal cores. This vision of personalized AI assistance and localization on personal devices holds immense potential for a future where AI becomes an integral part of people’s lives, providing personalized assistance and streamlining routine tasks.

Read more about AI:

[ad_2]

Read More: mpost.io

Bitcoin

Bitcoin  Ethereum

Ethereum  Tether

Tether  XRP

XRP  Solana

Solana  USDC

USDC  TRON

TRON  Dogecoin

Dogecoin  Lido Staked Ether

Lido Staked Ether  Cardano

Cardano  Wrapped Bitcoin

Wrapped Bitcoin  Hyperliquid

Hyperliquid  Wrapped stETH

Wrapped stETH  Sui

Sui  Bitcoin Cash

Bitcoin Cash  LEO Token

LEO Token  Chainlink

Chainlink  Stellar

Stellar  Avalanche

Avalanche  Toncoin

Toncoin  USDS

USDS  WhiteBIT Coin

WhiteBIT Coin  Shiba Inu

Shiba Inu  WETH

WETH  Wrapped eETH

Wrapped eETH  Litecoin

Litecoin  Binance Bridged USDT (BNB Smart Chain)

Binance Bridged USDT (BNB Smart Chain)  Hedera

Hedera  Monero

Monero  Ethena USDe

Ethena USDe  Polkadot

Polkadot  Bitget Token

Bitget Token  Coinbase Wrapped BTC

Coinbase Wrapped BTC  Uniswap

Uniswap  Pepe

Pepe  Pi Network

Pi Network  Aave

Aave  Dai

Dai  Ethena Staked USDe

Ethena Staked USDe  Bittensor

Bittensor  OKB

OKB  BlackRock USD Institutional Digital Liquidity Fund

BlackRock USD Institutional Digital Liquidity Fund  Aptos

Aptos  Cronos

Cronos  Internet Computer

Internet Computer  NEAR Protocol

NEAR Protocol  Jito Staked SOL

Jito Staked SOL  sUSDS

sUSDS  Ethereum Classic

Ethereum Classic