The 7-billion-parameter LLaMA model now runs at a reported 40 tokens per second on a MacBook equipped with the M2 Max chip. The result follows a recent update to the llama.cpp Git repository by Georgi Gerganov, who implemented model inference on the GPU through Metal, Apple's GPU programming framework for its own chips.

Running inference on the GPU has yielded striking results. The LLaMA model reportedly shows 0% CPU utilization during generation, with the work spread across all 38 GPU cores of the M2 Max. The result showcases the capabilities of the model and also highlights Gerganov's skill as an engineer.

The implications of this development are far-reaching for AI enthusiasts and everyday users alike. With personalized LLaMA models running locally, individuals could hand off routine tasks to their own assistant. This points toward a modular approach: a large model is trained centrally, then fine-tuned and customized by each user on their personal data, resulting in a personalized and efficient AI assistant.

A personalized LLaMA model that helps individuals with everyday matters holds considerable potential. By running the model on personal devices, users can benefit from powerful AI while keeping control over their data. Running locally also avoids network round trips, which helps keep response times short and interactions with the assistant smooth.
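To put the reported 40 tokens per second in terms of response time, the conversion can be sketched as below. This is a rough illustration only: the function name and the 200-token reply length are hypothetical, and the calculation assumes a steady generation rate and ignores the time needed to process the prompt.

```python
def generation_time_seconds(num_tokens: int, tokens_per_second: float) -> float:
    """Return the time needed to generate num_tokens at a steady rate."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return num_tokens / tokens_per_second


# Rate reported in the article for the 7B model on an M2 Max.
REPORTED_RATE = 40.0  # tokens per second

# Latency of a single token, in milliseconds.
per_token_ms = 1000.0 * generation_time_seconds(1, REPORTED_RATE)

# 200 tokens is a hypothetical reply length chosen for illustration.
reply_seconds = generation_time_seconds(200, REPORTED_RATE)

print(f"{per_token_ms:.0f} ms per token, {reply_seconds:.1f} s for a 200-token reply")
# prints "25 ms per token, 5.0 s for a 200-token reply"
```

At this rate a short answer arrives in a few seconds, faster than most people read.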

The combination of massive model sizes and efficient inference on specialized hardware paves the way for a future where AI becomes an integral part of people’s lives, providing personalized assistance and streamlining routine tasks.

Advancements like these bring us closer to a world where AI models can be tailored to individual needs and run locally on personal devices. If each user can refine their LLaMA model on their own data, the potential gains in efficiency and productivity are substantial.

The LLaMA model's performance on the Apple M2 Max chip is a testament to the rapid progress being made in AI research and development. With dedicated engineers like Gerganov pushing the boundaries of what is possible, the future holds promise for personalized, efficient, locally run AI models that will change the way we interact with technology.

Read More: mpost.io

Bitcoin

Bitcoin  Ethereum

Ethereum  Tether

Tether  XRP

XRP  Solana

Solana  USDC

USDC  Dogecoin

Dogecoin  TRON

TRON  Cardano

Cardano  Lido Staked Ether

Lido Staked Ether  Wrapped Bitcoin

Wrapped Bitcoin  Hyperliquid

Hyperliquid  Sui

Sui  Wrapped stETH

Wrapped stETH  Chainlink

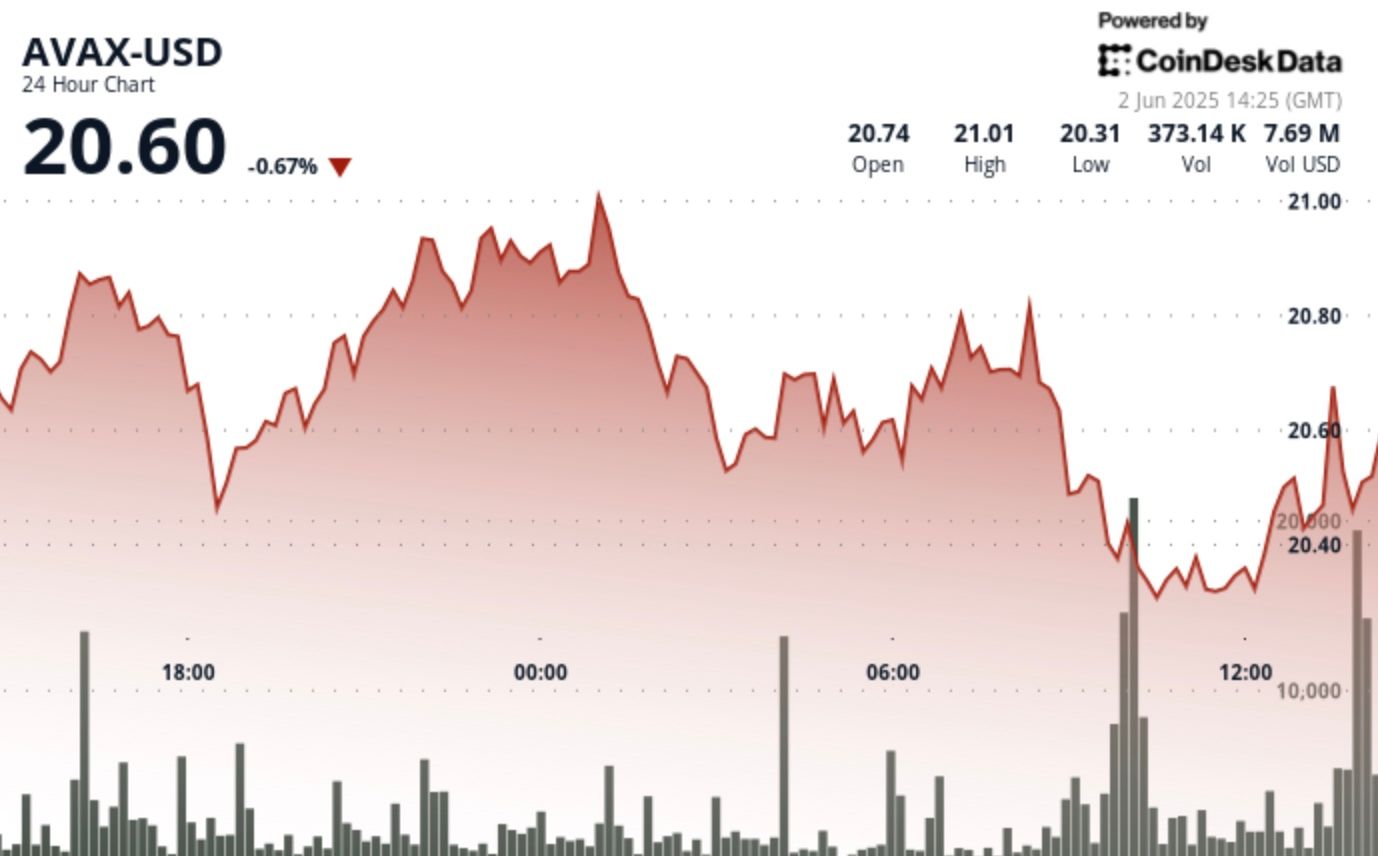

Chainlink  Avalanche

Avalanche  Stellar

Stellar  Bitcoin Cash

Bitcoin Cash  Toncoin

Toncoin  LEO Token

LEO Token  Shiba Inu

Shiba Inu  Hedera

Hedera  USDS

USDS  Litecoin

Litecoin  WETH

WETH  Monero

Monero  Wrapped eETH

Wrapped eETH  Polkadot

Polkadot  Binance Bridged USDT (BNB Smart Chain)

Binance Bridged USDT (BNB Smart Chain)  Ethena USDe

Ethena USDe  Bitget Token

Bitget Token  Pepe

Pepe  Pi Network

Pi Network  Coinbase Wrapped BTC

Coinbase Wrapped BTC  WhiteBIT Coin

WhiteBIT Coin  Aave

Aave  Uniswap

Uniswap  Dai

Dai  Bittensor

Bittensor  Ethena Staked USDe

Ethena Staked USDe  Cronos

Cronos  Aptos

Aptos  NEAR Protocol

NEAR Protocol  OKB

OKB  Jito Staked SOL

Jito Staked SOL  BlackRock USD Institutional Digital Liquidity Fund

BlackRock USD Institutional Digital Liquidity Fund  Internet Computer

Internet Computer  Ondo

Ondo  Ethereum Classic

Ethereum Classic