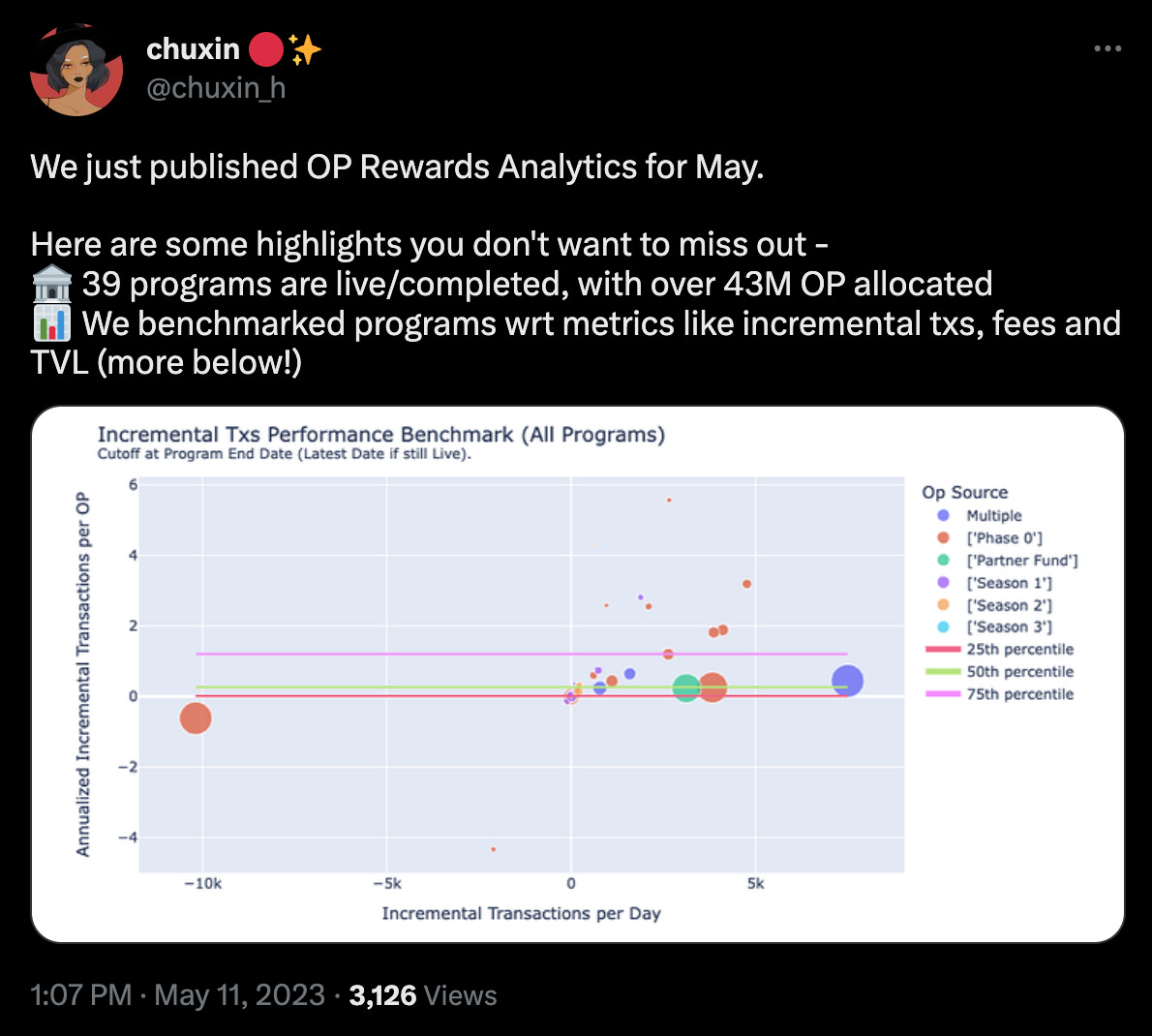

While on the blue bird app last week, I came across the Optimism team’s breakdown of how they’re thinking about their airdrops/liquidity incentives in a very data-driven and scientific way (props to them!). Looking through it, I knew I had to dig deeper to better understand what was going on, considering the amazing in-depth work they’ve done. Link to the Twitter thread here.

Before you interpret any data, it’s critical to understand what the data is actually describing. I could be partially wrong here since I’m not involved with the OP team in any way. This is my best attempt based on what I could gather from Twitter, governance forums, Dune dashboards, GitHub and Jupyter notebooks. Okay, moving past disclaimers.

I started with the latest governance forum post, which describes how OP has basically been running “growth”-like airdrop experiments over the past few months and then meticulously measuring how each one performed. They’re probably the first project to take a measured approach like this, which is great because it confirms or disproves theses we’ve all had about how airdrops and growth in crypto work.

To make sure I could maximise my understanding, I started at the very first post from March over here. The methodology that is used for these experiments looks a little something like this:

- Allocate OP tokens to projects to distribute them to end users (which I think is the right way of doing it).
- Track how much OP was allocated and how much was deployed by the project in a program.
- Understand what base metrics such as dollar value of net flows, gas fees and transactions looked like before the experiment and then what they look like after the experiment.

This allows the team to then construct a table that looks a little like the below for each program that is run.
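To make the idea concrete, here is a minimal sketch of how one row of such a table might be computed. It is my own illustration, not the OP team's code: the `ProgramWindow` shape, the `summarize_program` helper, the field names and the example numbers are all hypothetical, and the metric could be net flows, gas fees or transactions.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class ProgramWindow:
    """Daily values of one metric around a single incentive program (hypothetical shape)."""
    before: list[float]  # days leading up to the program
    during: list[float]  # days while rewards were live
    after: list[float]   # days once rewards had ended


def summarize_program(name: str, op_allocated: float, op_deployed: float,
                      window: ProgramWindow) -> dict:
    """Build one summary row: OP spent plus the metric's change versus its baseline."""
    baseline = mean(window.before)
    during = mean(window.during)
    after = mean(window.after)
    return {
        "program": name,
        "op_allocated": op_allocated,
        "op_deployed": op_deployed,
        "deployed_share": op_deployed / op_allocated,
        "avg_before": baseline,
        "avg_during": during,
        "avg_after": after,
        # Relative change versus the pre-program baseline.
        "uplift_during": during / baseline - 1,
        "uplift_after": after / baseline - 1,
    }


# Made-up numbers: daily transactions triple during the program,
# then settle 20% above the baseline once rewards stop.
row = summarize_program(
    "example-dex", op_allocated=100_000, op_deployed=80_000,
    window=ProgramWindow(before=[100, 100], during=[300, 300], after=[120, 120]),
)
print(row)
```

The number to watch is `uplift_after`: a big `uplift_during` that collapses back towards zero afterwards is exactly the pattern this article is about.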

We’ve already got a few months’ worth of data, which is helpful to start drawing conclusions. I’ve written in previous posts about the problems with airdrops, so none of this will be new; it’s more confirming evidence (fingers crossed I’m avoiding confirmation bias here).

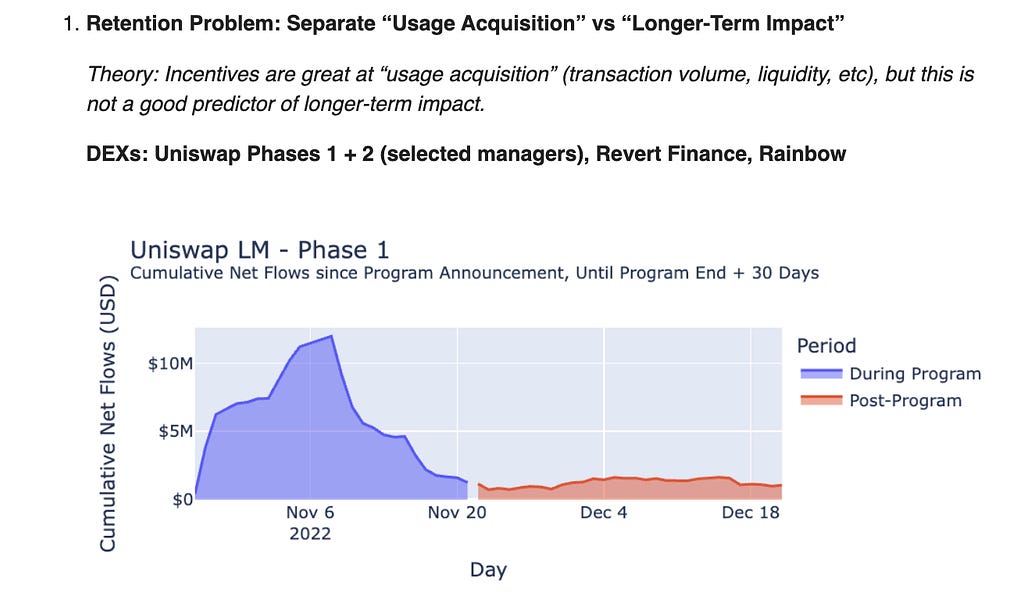

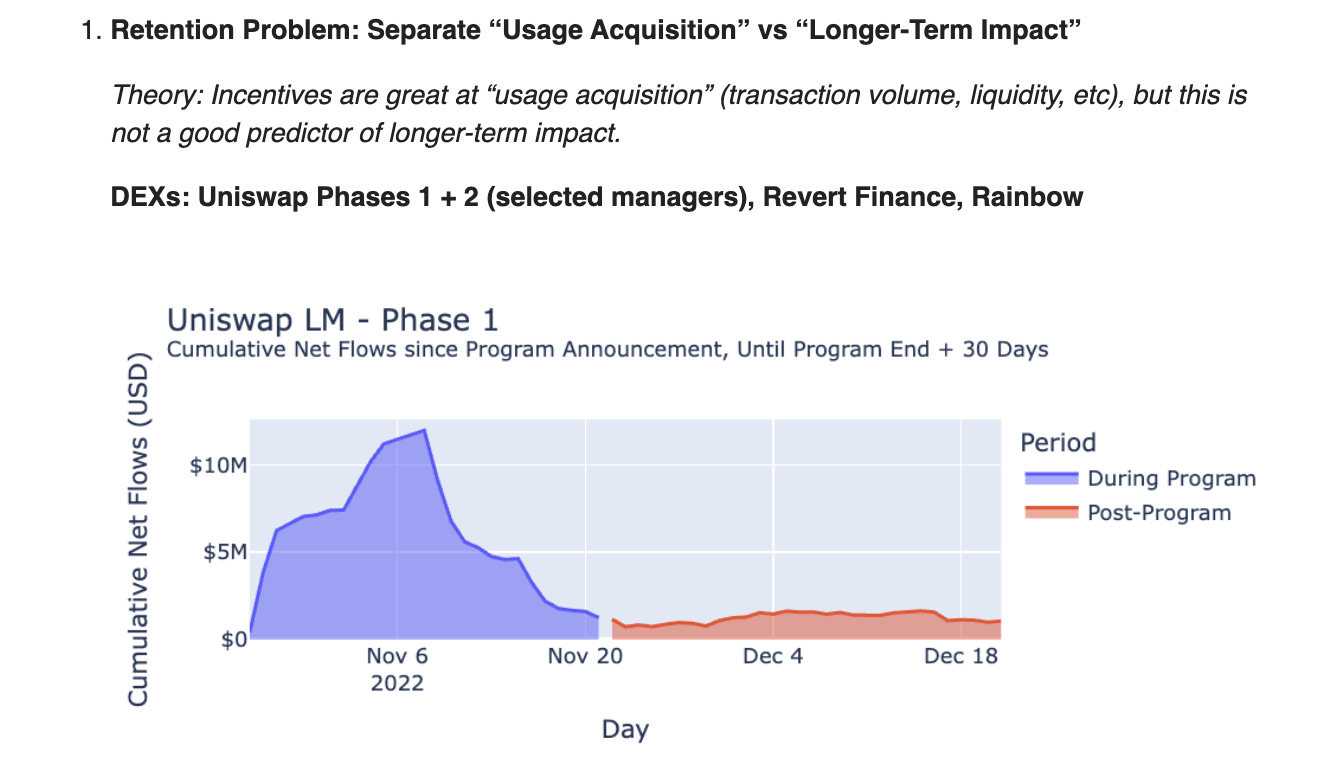

The easiest and most obvious thesis in this whole thing: airdrops are ineffective at driving the behaviour they incentivise because retention is so low. We’ve already got data on this from past airdrops, but seeing it play out with liquidity incentives makes it painfully clear. In the screenshot below we see how incentivised DEX usage drops off a cliff after the free money finishes. Also, mind you, this is only a timeframe of 60 days! If the D30 retention is this poor, D90 is probably not going to look any better.

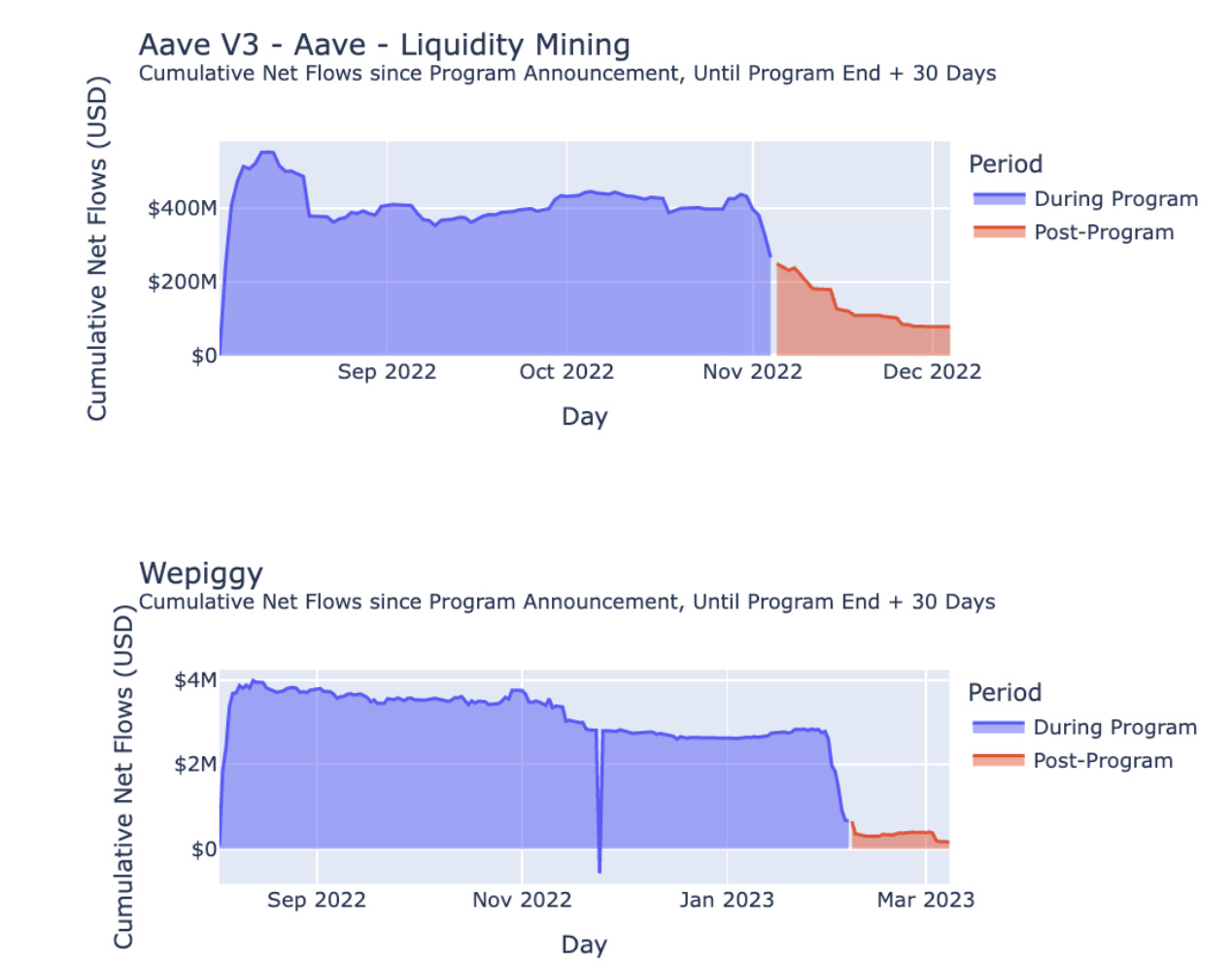

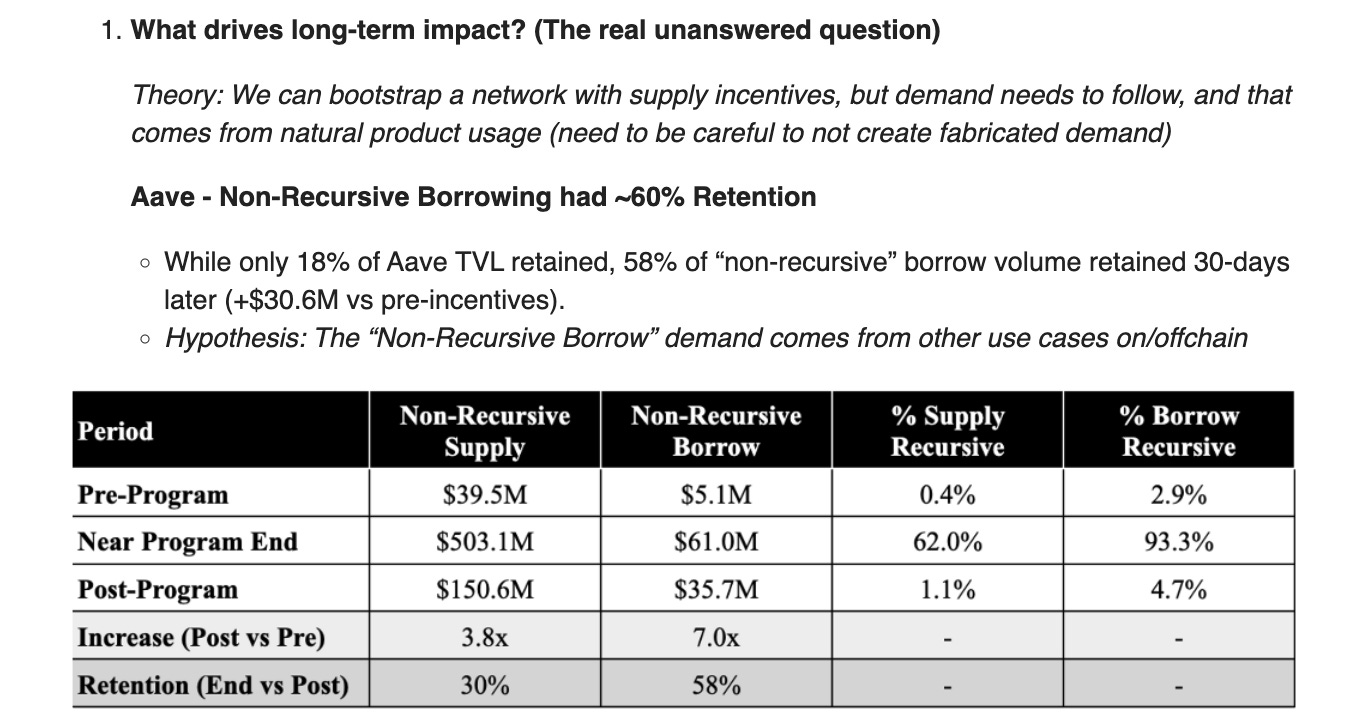

What’s interesting is that they did the same with lending/borrowing markets such as Aave and WePiggy (which I’d never heard of). Aave was stickier, with 18% D30 retention compared to WePiggy’s 6%. I’d love to see what the D90 numbers look like because that’s the true indicator in my view.

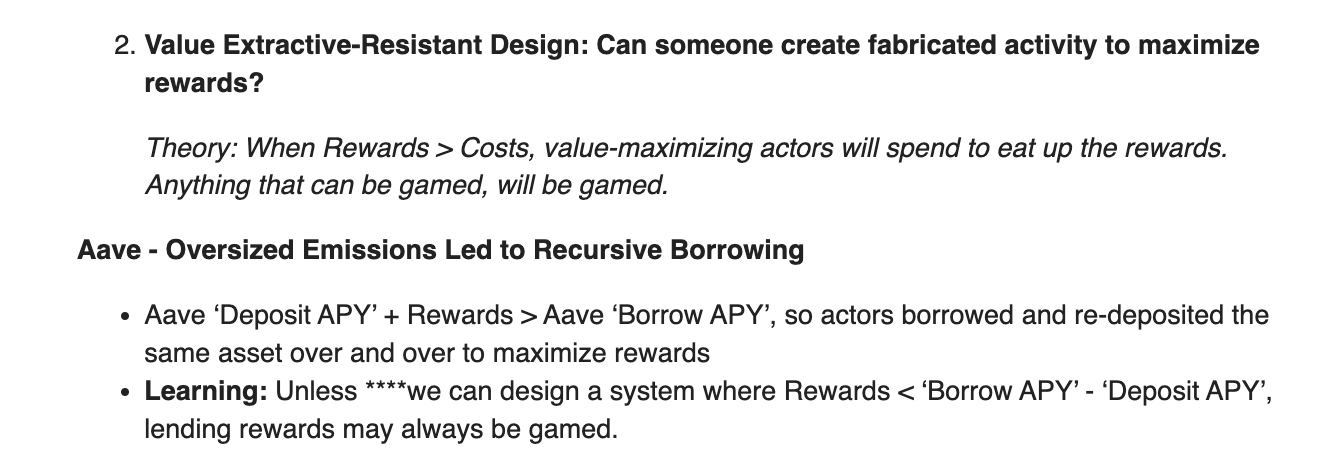
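For anyone wanting to reproduce a retention number like the D30 figures above, here is a rough sketch. The exact definition the OP team uses isn't spelled out here, so I'm assuming one: the cohort is every address active while rewards were live, and an address counts as retained if it is active again on or after day N following the end of the program. The `dn_retention` helper and the sample addresses are hypothetical.

```python
from datetime import date, timedelta


def dn_retention(activity: dict[str, set[date]], program_start: date,
                 program_end: date, days: int = 30) -> float:
    """Share of the program cohort still active `days` days after rewards ended.

    `activity` maps an address to the set of dates it transacted on.
    Assumed definition (not necessarily the OP team's): retained means at
    least one active date on or after program_end + `days`.
    """
    cutoff = program_end + timedelta(days=days)
    # Cohort: addresses that used the protocol while incentives were live.
    cohort = {
        addr for addr, dates in activity.items()
        if any(program_start <= d <= program_end for d in dates)
    }
    if not cohort:
        return 0.0
    retained = {addr for addr in cohort if any(d >= cutoff for d in activity[addr])}
    return len(retained) / len(cohort)


start, end = date(2023, 1, 1), date(2023, 1, 31)
activity = {
    "0xaaa": {date(2023, 1, 5), date(2023, 3, 10)},   # came back after day 30
    "0xbbb": {date(2023, 1, 6)},                      # farmed and left
    "0xccc": {date(2023, 1, 20), date(2023, 2, 3)},   # left within 30 days
    "0xddd": {date(2023, 1, 31)},                     # farmed and left
    "0xeee": {date(2023, 3, 15)},                     # never in the cohort
}
print(f"D30 retention: {dn_retention(activity, start, end):.0%}")
```

Passing `days=90` gives the D90 version, provided the activity data reaches that far past the end of the program.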

I’ve written about this in the LooksRare piece: essentially, you need to make sure the LTV/fees you earn from a user can make up for the rewards/incentives you pay out to them. Otherwise you’re running a loss leader that is neither sustainable nor effective.
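A back-of-the-envelope version of that check can be sketched as follows. Everything here is an assumption for illustration: the `incentive_payback` helper, the constant month-over-month retention rate and the dollar figures are all made up, not taken from the OP data.

```python
def incentive_payback(reward_per_user: float, fee_per_user_per_month: float,
                      monthly_retention: float, months: int = 12) -> tuple[float, float]:
    """Expected fees from one incentivised user versus the reward paid to them.

    Assumes a simple model: the user pays `fee_per_user_per_month` while
    active, and the chance they are still active shrinks by
    `monthly_retention` each month.
    Returns (expected_fees, expected_fees - reward_per_user).
    """
    expected_fees = sum(
        fee_per_user_per_month * monthly_retention ** m for m in range(months)
    )
    return expected_fees, expected_fees - reward_per_user


# Hypothetical numbers: $50 of rewards per user, $5 of fees per active
# month, and only half of the users sticking around each month.
fees, net = incentive_payback(reward_per_user=50, fee_per_user_per_month=5,
                              monthly_retention=0.5, months=3)
print(f"expected fees: ${fees:.2f}, net per user: ${net:.2f}")
```

If the net comes out negative over any horizon you can realistically retain users for, the incentive is a subsidy rather than an acquisition cost.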

The OP folks also came to this conclusion and put it as “when rewards exceed costs, the system shall be gamed”.

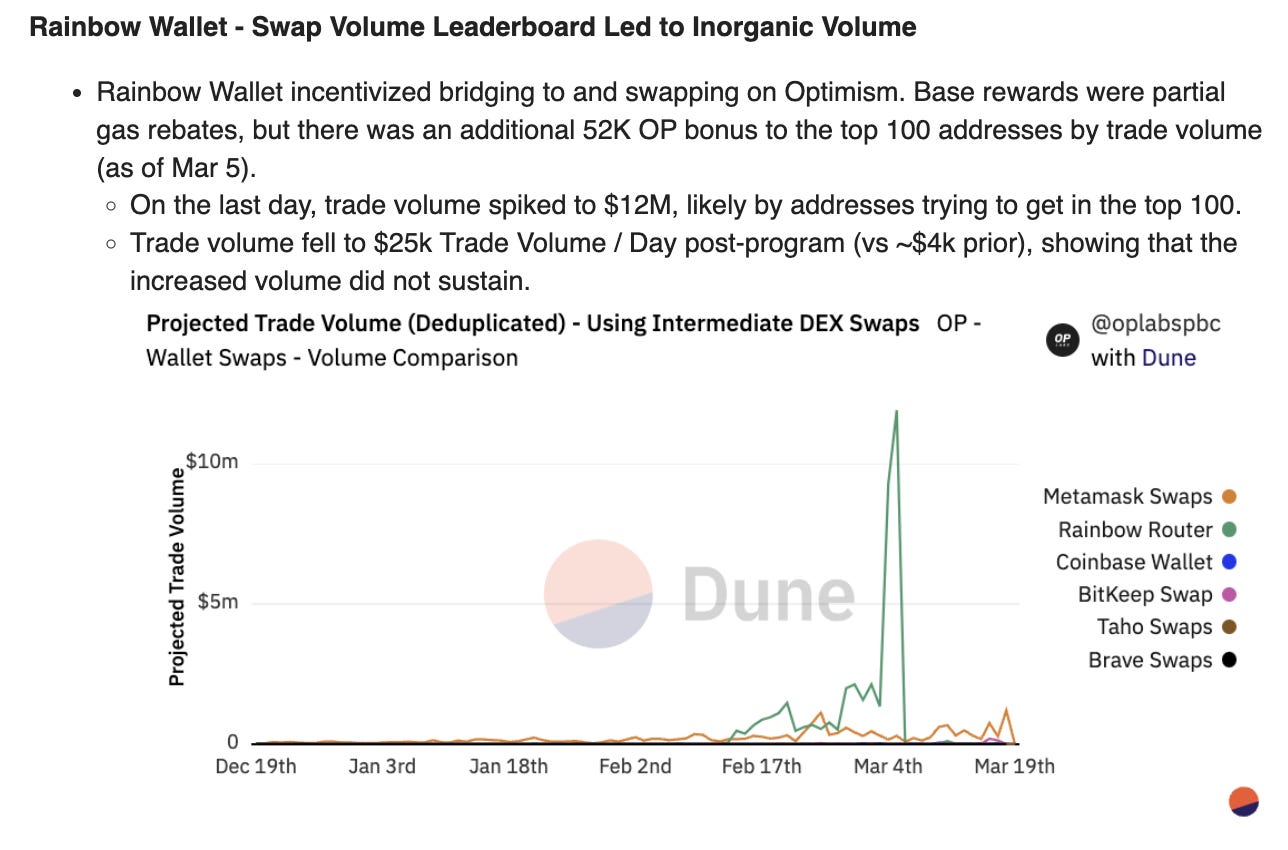

To make this more concrete, they refer to Rainbow wallet which had an incentivised swap volume leaderboard that more or less got gamed since it was so trivial to do so. This is a common problem with many “growth” programs that we see in crypto. Making number go up is trivial, but keeping up-number is a much more difficult problem.
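The “rewards exceed costs” condition is simple enough to write down. This is a toy sketch with hypothetical parameters (`wash_trade_profit`, the fee rate, the gas cost and the reward rate are mine, not from the Rainbow program): someone trading with themselves pays swap fees and gas, and gets a reward proportional to volume.

```python
def wash_trade_profit(volume: float, fee_rate: float, gas_cost: float,
                      reward_per_volume: float) -> float:
    """Profit from pushing `volume` through a program purely to farm rewards."""
    cost = volume * fee_rate + gas_cost
    reward = volume * reward_per_volume
    return reward - cost


def is_gameable(volume: float, fee_rate: float, gas_cost: float,
                reward_per_volume: float) -> bool:
    """True when rewards exceed costs, i.e. farming the program pays."""
    return wash_trade_profit(volume, fee_rate, gas_cost, reward_per_volume) > 0


# Made-up numbers: $10k of volume, 0.3% swap fee, $5 of gas,
# and rewards worth 0.5% of volume.
print(wash_trade_profit(10_000, 0.003, 5, 0.005))
print(is_gameable(10_000, 0.003, 5, 0.005))
```

Any program where this comes out positive for a user who creates no real value should be expected to attract exactly that user.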

As I was reading it I was thinking of the LooksRare article I wrote. Funnily enough, the OP team also references it!

With LooksRare and X2Y2 introducing tokens rewards for trading, we’ve seen a significant increase in NFT wash trade volumes (58% of the NFT secondary volume was wash trading in 2022).

Wash trade volume may disappear once the incentives become less attractive or profitable for traders (starting Sep 2022).

I hadn’t come across this term when talking about airdrops, but I think it’s a really good one for describing what we’re all trying to do here: incentivise real usage and growth that sticks around after the free money has ended.

This is where genuinely good and useful products win: they’re just much better than the financial engineering littered on top of competitors. The best product should win but unfortunately in crypto the incentives are clearly skewed to the wrong values.

What’s great about getting the answers to these questions through numbers and data is that we can start developing benchmarks and useful comparison guidelines that everyone can rally around. My original intent for this article was to get into the actual numbers, but explaining the methodology ended up taking far longer than I anticipated (there’s also the Substack article length limit). In the next part of this article I want to touch on the actual numbers that the OP team has calculated after more experiments, and some deeper learnings that have come from more iterations of doing these airdrops.

Until then, feel free to join in the discussion over here: https://t.me/+T-XpBMSS1ylUz0ej

Read More: kermankohli.substack.com
